In this digital era, organizations are forced to make a trade-off between faster time to market and a flawless user experience. The current goal for organizations is to run more tests, find bugs quickly, and release faster. In this white paper, we will discuss how AI is impacting software testing, current challenges with test automation, how AI can help resolve these challenges, how testers can embrace AI, and how the future of automation looks through the lens of AI.

What is artificial intelligence?

The terms artificial intelligence (AI), machine learning (ML), and deep learning (DL) are often used interchangeably, but they are not the same thing.

- AI is an area of computer science that emphasizes the creation of intelligent machines that work and react like humans.

- ML is a subset of AI and evolved from the study of pattern recognition and computational learning theory (studying design and analysis of ML algorithms) in AI. It is a field of study that gives computers the ability to learn without being explicitly programmed.

- DL is one of the many approaches to ML. Other approaches include decision tree learning, inductive logic programming, clustering, and Bayesian networks. DL is based on artificial neural networks, which are loosely inspired by the neurons of the human brain. Each neuron keeps learning and interconnects with other neurons to perform different actions based on different responses.

While the term AI has become prominent in the past few years, AI is not a new concept. Research on AI dates back to the 1950s, and decades ago Stanford was already experimenting with AI for autonomous cars, an experiment that was documented in a published video.

Impact on software testing

The role of technology within our personal and professional lives continues to evolve at an exceptionally fast pace. From mobile apps that command home appliances to virtual reality, the digital revolution is expanding to encompass every aspect of the human experience. On a global scale, businesses are launching apps that are used by thousands, if not millions, of people. The majority of those businesses are developing apps using the agile rapid delivery framework, which equates to a new launch roughly every two weeks. Before each launch, those apps must be tested to ensure an optimal experience for the end-user. At that pace, manual testing is simply inadequate.

The time needed to complete the slew of required test cases directly conflicts with the fast pace driven by agile-like frameworks and continuous development. Exploring alternative and superior testing methods, such as automation and AI, is now a necessity in order to keep pace and make QA and test teams more efficient. AI is showing great potential in identifying defects quickly and reducing the need for human intervention. Such advances make it possible to determine how a product will perform, both at the machine level and at the data-server level. AI, just like automation tools, is going to aid in the overall testing effort. In the current era, where the emphasis is on DevOps, CI/CD, integration, and continuous testing, AI can help speed up these processes and make them more efficient.

Current challenges when transitioning to agile

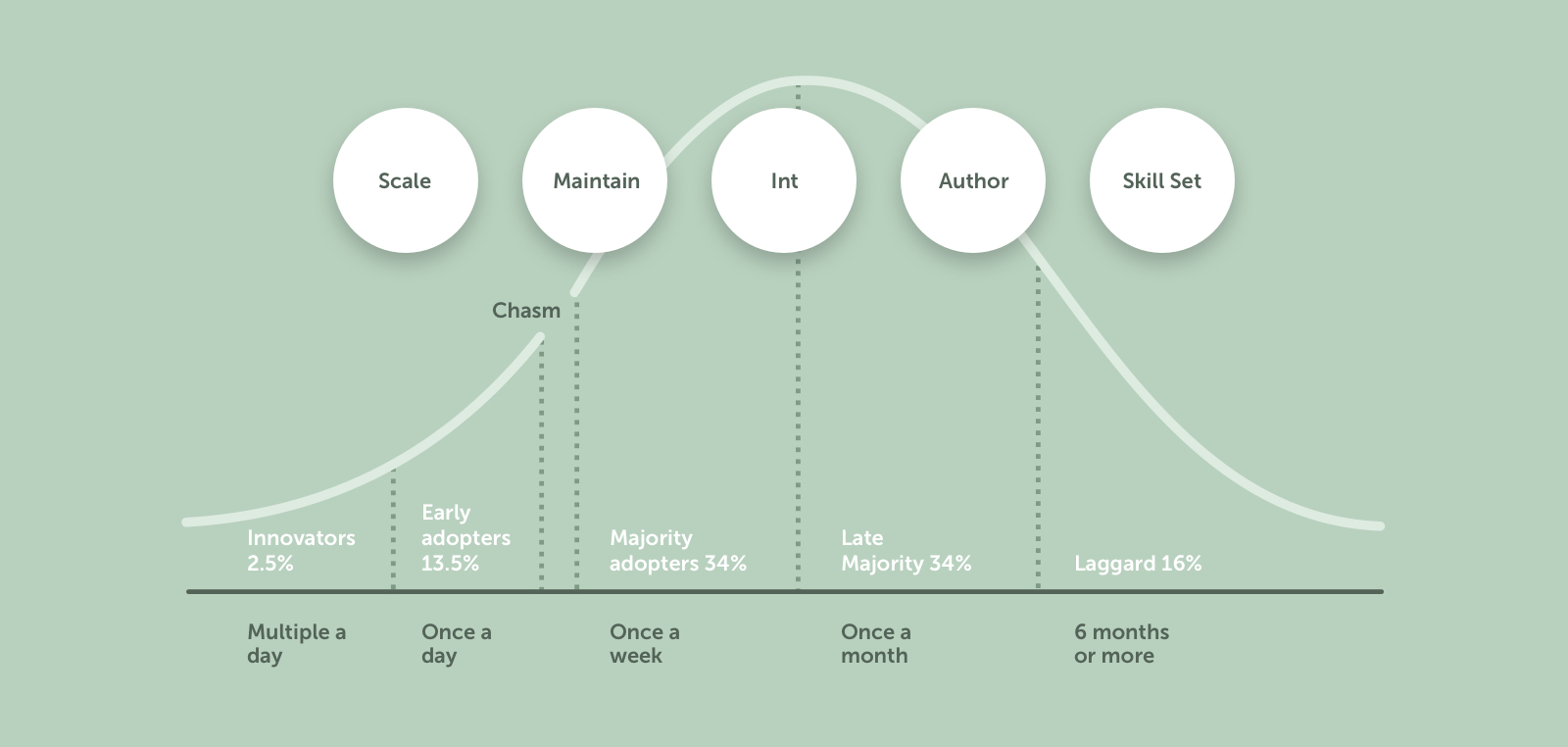

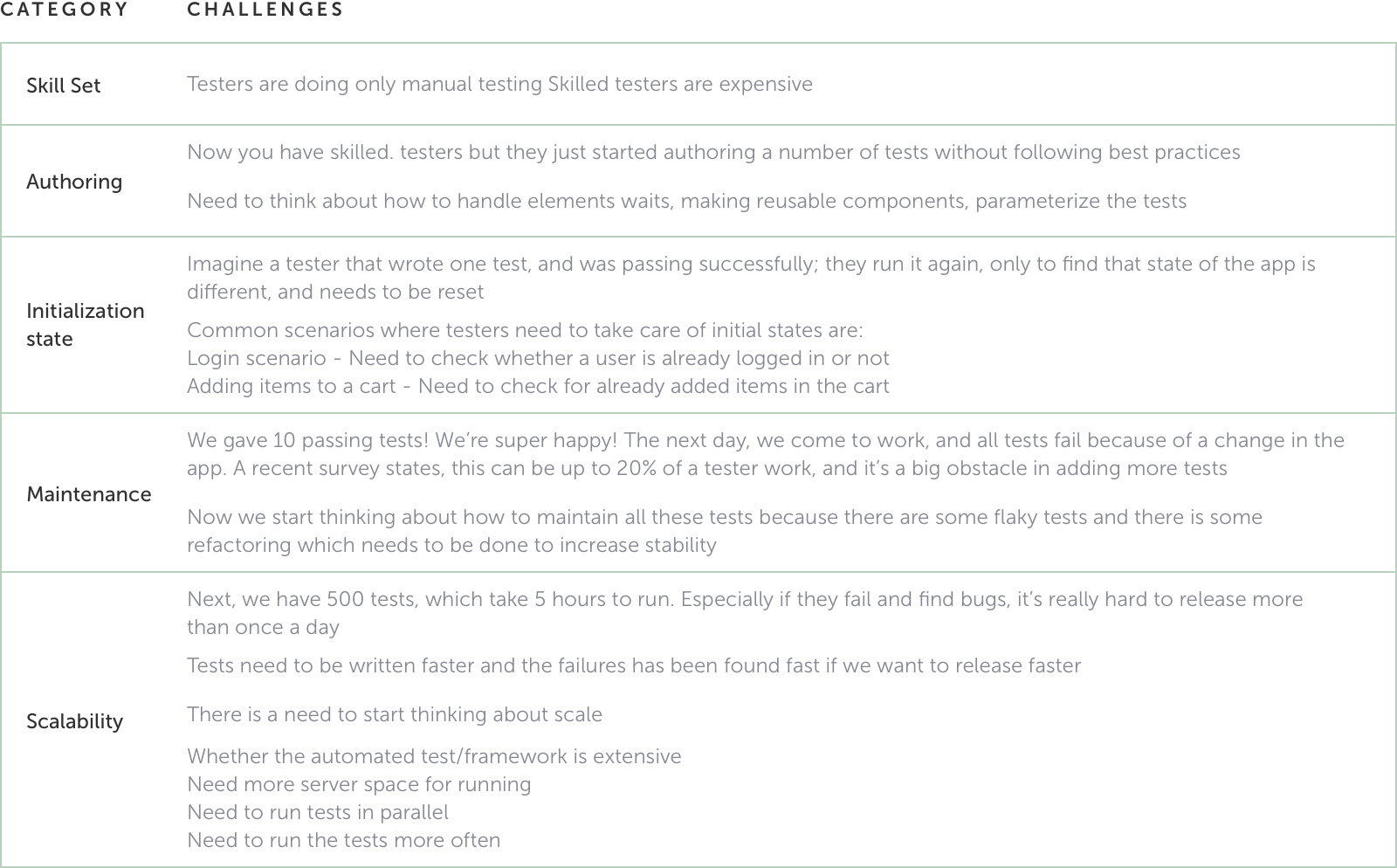

Let’s discuss the current challenges businesses face while transitioning to agile and how this transition affects release cycles. This will help you understand how AI can help solve some of these challenges.

How AI can solve some of these challenges

First, organizations should take testing seriously and have a dedicated QA team with set processes and standards. The most common mistake teams make is to concentrate on developing the feature and then, when it’s time to test, hand the software to whoever happens to be free. Software testing is an art and a dedicated craft; we need curious testers with a great attitude and a skeptical mindset to question the product and evaluate it without bias.

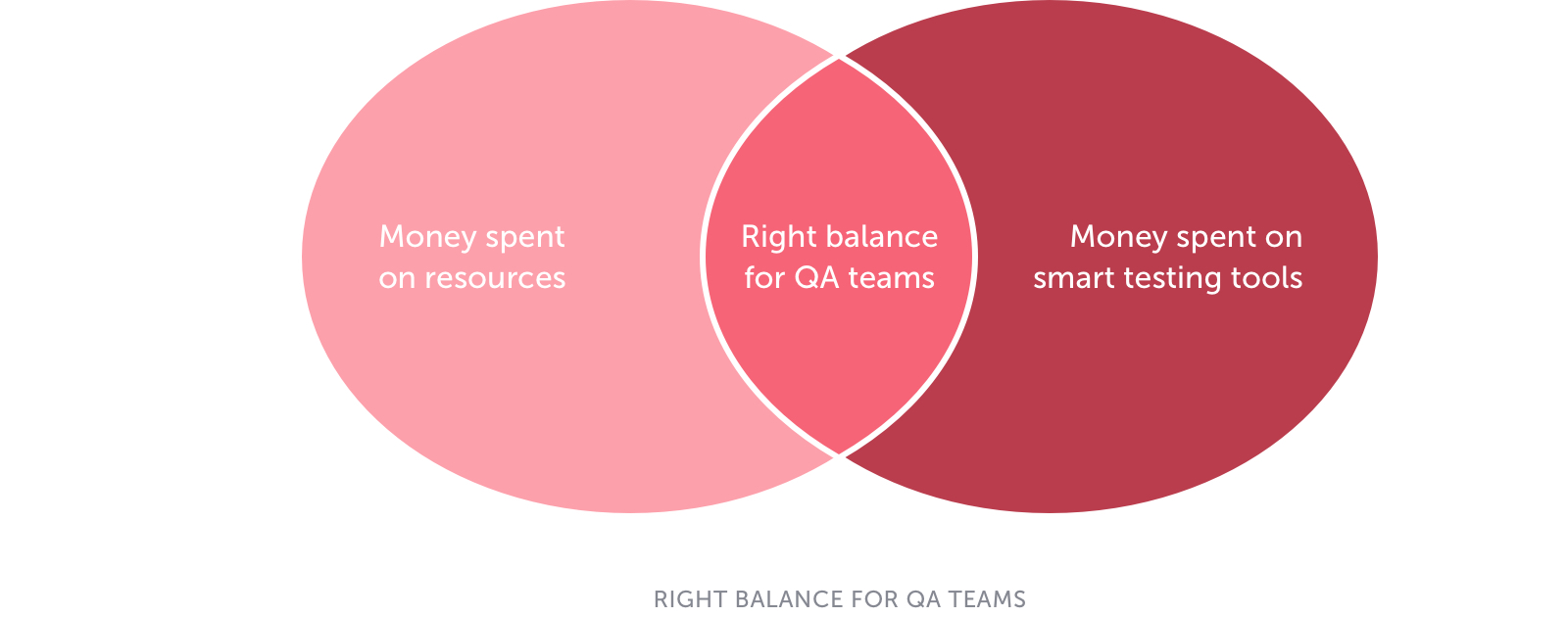

Second, forming a skilled dedicated QA team takes a lot of time, effort, and expense. There is a fine balance we need to maintain in terms of how much money we are going to invest in bringing in resources versus investing in a smart tool that can aid in testing. A good start would be to have two or three people dedicated to testing. They start simple by using the app and running through high-level scenarios using an AI-based tool. The more tests they run, the more data the AI gathers to learn and start building relationships related to the application under test (AUT).

Now that we have authored some tests for these flows, the next challenge is applying best practices. Quite often, when authoring a test, teams do not pay attention to best practices such as giving adequate waits, parameterizing tests, creating reusable components, and writing single-responsibility tests with low coupling.

Also, testing often starts late in the software development lifecycle (SDLC). Bugs found late require a lot of rework, which raises the cost of fixing them and affects the timeline and release cycles. This is why we need to align with the “shift left” paradigm, where testing starts in parallel with development as early as possible, right from the requirements phase and continuing all the way until release. This way, bugs can be found faster and the cost of fixing them is significantly lower than if they were found in later stages of the release cycle.

While authoring these tests, we also need to pay attention to their initial states. For example, say we are writing a script to add items to a shopping cart. Every time we run the test, we need to ensure that it starts from its initial state of an empty cart before adding items. This prevents skewed results and is in line with good automation practices.
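The empty-cart example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ShoppingCart` is a hypothetical stand-in for the application under test, and the standard-library `unittest` module is used instead of any particular AI-based tool. The sketch shows a test resetting shared state before every run, plus a simple form of parameterization.

```python
import unittest


class ShoppingCart:
    """Hypothetical stand-in for the application under test."""

    def __init__(self):
        self.items = []

    def add(self, name, quantity=1):
        self.items.append((name, quantity))

    def clear(self):
        self.items.clear()

    def total_quantity(self):
        return sum(quantity for _, quantity in self.items)


# One shared cart, mimicking state that persists between test runs.
shared_cart = ShoppingCart()


class AddToCartTest(unittest.TestCase):
    def setUp(self):
        # Reset to the known initial state (an empty cart) before every test.
        shared_cart.clear()

    def test_add_single_item(self):
        shared_cart.add("book")
        self.assertEqual(shared_cart.total_quantity(), 1)

    def test_add_multiple_items(self):
        # Parameterized inputs: the same check runs for several data sets.
        for quantities in ([1], [1, 2], [3, 3, 3]):
            with self.subTest(quantities=quantities):
                shared_cart.clear()
                for quantity in quantities:
                    shared_cart.add("item", quantity)
                self.assertEqual(shared_cart.total_quantity(), sum(quantities))
```

Saved to a file, the tests can be run with `python -m unittest`. Without the reset in `setUp`, the result of each test would depend on whichever test ran before it, which is exactly the kind of skewed result described above.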

The next biggest problem with test automation is maintenance. As software becomes increasingly complex, we end up adding more tests. As a result, we have a plethora of tests to run and maintain. When tests fail, we need to spend a lot of time troubleshooting the failure and fixing it. A recent study found that testers spend about 40 percent of their time just maintaining tests. Imagine what could happen if we reduced this to between one and five percent of a tester’s time. Wouldn’t that be amazing? Testers could then spend their valuable time actually testing the software instead of troubleshooting tests. This can be made a reality by using dynamic locators.

This is a strategy where the AI parses, in real time, multiple attributes of each element the user interacts with in the application and creates a list of location strategies. So, even if an attribute of an element changes, the tests do not fail; instead, the AI detects the problem and moves to the next best location strategy to identify the element on the page. In this way, the tests are more stable and, as a result, the authoring and execution of tests are faster as well.
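The fallback idea can be sketched as follows. This is a minimal illustration, not how any specific tool works: the `Element` class, the attribute names, and the fixed preference order are all assumptions made for the example, and a real AI-based tool would learn and rank its strategies rather than use a hard-coded list.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Hypothetical, simplified view of a page element."""
    attributes: dict = field(default_factory=dict)


def build_strategies(element):
    """Record each known attribute as a (name, value) location strategy,
    ordered from most to least preferred (the order is an assumption)."""
    preferred_order = ["id", "name", "css_class", "text"]
    return [(key, element.attributes[key])
            for key in preferred_order if key in element.attributes]


def locate(page, strategies):
    """Try each strategy in turn; return the first unique match and the key used."""
    for key, value in strategies:
        matches = [el for el in page if el.attributes.get(key) == value]
        if len(matches) == 1:
            return matches[0], key
    raise LookupError("no strategy matched a unique element")


# Attributes captured when the test was authored.
recorded = Element({"id": "btn-checkout", "name": "checkout",
                    "css_class": "primary-cta", "text": "Checkout"})
strategies = build_strategies(recorded)

# A later release: the id and visible text changed, the name did not.
page = [
    Element({"id": "btn-buy", "name": "checkout",
             "css_class": "primary-cta", "text": "Buy"}),
    Element({"id": "btn-cancel", "name": "cancel",
             "css_class": "secondary", "text": "Cancel"}),
]

element, used = locate(page, strategies)
print(used)  # name
```

A test that relied on the `id` alone would have failed here. With several recorded strategies, the lookup falls through to `name` and the test keeps running.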

Finally, AI can help overcome the challenge of scale, letting teams find bugs quickly and release faster, through a self-healing mechanism in which the AI identifies and resolves problems before they cause test failures. In this way, our approach to test automation becomes more proactive than reactive.

How can testers embrace AI?

AI has a proven ability to function with more collective intelligence, speed, and scale than even the best-funded app teams. With continuous development setting an ever more aggressive pace, combined with pressure from AI-inspired automation, robots, and chatbots, the question that looms in the minds of most software testers is this: Are testing and QA teams under siege? Are QA roles in jeopardy of being phased out or replaced, as in the manufacturing industry?

Over the past decade, technologies have evolved drastically. There have been many changes in the technology space, but one constant is human testers’ interaction with technologies and how we use them for our needs. The same holds true for AI. Additionally, to train the AI, we need good input/output combinations (which we call a training dataset). So, to work with modern software, we need to choose this training dataset carefully, as this is what the AI learns from and builds relationships on. It is also important to monitor how the AI is learning as we give it different training datasets, since this is vital to how the software is going to be tested. So we would still need human involvement in training the AI.

Finally, while working with AI, it is important to ensure that the security, privacy, and ethical aspects of the software are not compromised. All these factors contribute to better testability of the software. We need humans for this too.

In summary, we will continue to do exploratory testing manually, but we will use AI to automate processes while we do this exploration. It is just like automation tools, which do not replace manual testing but complement it.

So, contrary to popular belief, the outlook is not all doom and gloom; being a real, live human does have its advantages. For instance, human testers can improvise and test without written specifications, differentiate clarity from confusion, and sense when the look and feel of an on-screen component is “off” or seems wrong. The complete replacement of manual testers will only happen when AI exceeds those unique qualities of human intellect. A myriad of areas will require in-depth testing to ensure the safety, security, and accuracy of all the data-driven technology and apps being created on a daily basis. In this regard, the use of AI for software testing is still in its infancy, with the potential for monumental impact.

Future of test automation

We have discussed various ways AI will influence the field of software testing and help solve some of the biggest challenges with test automation. In the future, the way we do test automation is going to change significantly, moving toward a more risk-based approach to software testing.

AI has the ability to learn from different user flows and create test cases based on actual user data. We no longer have to spend a lot of time creating test data based on production users since the AI is doing it automatically for us. This helps to increase test coverage and makes the automated tests much more effective, as they have been created based on real user flows.
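As a rough sketch of the idea, the snippet below derives candidate test flows from recorded user sessions. It is deliberately simple: it uses plain frequency counting rather than any learning algorithm, and the session data and page names are invented for illustration. An AI-based tool would do something more sophisticated, but the input (real user flows) and the output (flows worth automating first) are the same in spirit.

```python
from collections import Counter


def most_common_flows(sessions, top_n=3):
    """Count identical page sequences and return the most frequent ones."""
    counts = Counter(tuple(session) for session in sessions)
    return counts.most_common(top_n)


# Hypothetical recorded sessions: each is the ordered list of pages visited.
sessions = [
    ["home", "search", "product", "cart", "checkout"],
    ["home", "product", "cart"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "search"],
    ["home", "product", "cart"],
    ["home", "search", "product", "cart", "checkout"],
]

flows = most_common_flows(sessions, top_n=2)
for flow, count in flows:
    print(count, " -> ".join(flow))
```

Ranking flows by how often real users follow them also supports a risk-based approach: the most travelled paths are the ones where a defect would affect the most users.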

Replacing static locators with dynamic locators is going to make the tests more stable and, as a result, the authoring and execution of tests are going to be much faster. For example, say we have a “Checkout” button and we change its name to “Buy.” Tests that locate the element by a single attribute would fail after this change. With dynamic locators, which make use of multiple attributes for the same element, they no longer fail: even if the name of the button changes, the AI goes to the next best attribute to locate the element on the page. This saves a considerable amount of time maintaining automated tests.

Also, the more tests the user runs, the more data the AI collects about the tests, and the more stable the tests become over time. For example, based on the number of test runs, the AI can start optimizing the wait times in tests to accommodate different page load times in the application.
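One simple way to picture wait-time optimization is a timeout derived from observed page load times. The sketch below is an assumption-laden illustration, not a description of any tool's algorithm: the function name, the 95th-percentile choice, the safety margin, and the sample timings are all hypothetical.

```python
import math


def suggested_timeout(load_times, percentile=95, margin=1.2, floor=1.0):
    """Suggest a wait timeout in seconds from observed page load times."""
    if not load_times:
        # No history yet: fall back to the minimum timeout.
        return floor
    ordered = sorted(load_times)
    # Nearest-rank percentile: the smallest sample with at least
    # `percentile` percent of all samples at or below it.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    return max(floor, ordered[rank - 1] * margin)


# Hypothetical load times (seconds) collected over ten test runs.
observed = [0.8, 1.1, 0.9, 2.5, 1.0, 1.2, 0.7, 1.3, 1.0, 4.0]
timeout = suggested_timeout(observed)
```

As more runs are recorded, the suggestion tracks how the application actually behaves, so tests wait long enough for slow pages without padding every step with a fixed worst-case delay.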

Finally, test automation is not just a developer-focused task. Rather, everyone on the team can participate in writing automated tests, since the authoring and execution of tests become really simple with the use of AI. Users can record tests on their own as well as use the tests that are automatically created by the AI to build effective automated test suites. In this way, even non-technical people can author effective tests.

Conclusion

Even with steady progress being made in AI, the truth remains that mimicking the human brain is no easy task. Apps are used by humans, and the technological innovations being created take into account that human understanding, creativity, and contextualization are necessary traits to ensure a quality product. That said, manual testing remains essential and should complement automation and AI. They are distinct functions that, instead of being compared, should be leveraged according to their respective strengths. Rather than AI solutions replacing QA teams, AI can augment software testing and give testers superhuman efficiency.

What’s clear is that leaders in the technology industry will continue to dissolve boundaries and discover and innovate with machine learning and AI. As QA teams continue to embrace automation and welcome AI into their software testing practices, the outcomes will contribute to new solutions and ways of working, reinventing what’s possible.