Challenges with Open Source automation frameworks

If you have been in software test automation for more than a minute, you’ve probably heard of frameworks like Selenium or Puppeteer for browser and test automation. And if you’ve worked with them, you know the time and skill required to author tests, as well as their propensity for flakiness, false positives, and high maintenance. I don’t mean to disparage these frameworks; they can enable, and have enabled, some amazing advances in automated testing. Instead, I’ll discuss how alternative approaches address the two biggest bottlenecks to faster releases: slow authoring and flaky tests. Specifically, I’ll focus on AI-augmented low-code test automation.

Open your mind and suspend your disbelief for a few minutes as I present the case. I’ll limit this post to two primary areas of difference—authoring and stability. Other contrast areas, such as team administration, reporting, test execution, and test status, will have to wait for another day.

Before we talk about test authoring and stability, let’s start with some analyst data.

The market shift to AI-augmented testing

According to analyst firm Gartner [1], “by 2025, 70% of enterprises will have implemented an active use of AI-augmented testing, up from 5% in 2021.” This is a massive increase, due in part to the maturity of AI-based low code automation tools and their ability to generate value for end-users. Gartner further adds that by 2025, “organizations that [don’t implement] AI-augmented testing, will spend twice as much time and effort on testing and defect remediation compared to their competitors that take advantage.”

There are many approaches to AI-augmented testing aimed at helping companies create the proper tests faster, update tests automatically, check for visual regressions, and more. How do you make sense of all of this? Where should you start?

Addressing the fast authoring challenge

Writing UI tests in code requires an understanding of the programming language as well as the specific syntax of the testing framework. These skills are harder to find and develop, and people who have them command a premium over other testers and QA analysts. Your developers probably have the programming knowledge, but they already struggle to keep up with building features, configuration, and unit testing.

Customers and prospects tell us it takes six to ten hours to code a stable end-to-end test in Selenium, yet they can create the same test in Testim in 20 to 30 minutes, a time savings of over 90%. Customers reinvest that time to accelerate test coverage, tackle more complex problems, and expand exploratory testing.
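To check the “over 90%” figure, compare the two ends of each range. The least favorable case is six hours (360 minutes) of coding versus 30 minutes of recording. The most favorable case is ten hours (600 minutes) versus 20 minutes:

```latex
\text{savings} = 1 - \frac{t_{\text{Testim}}}{t_{\text{Selenium}}}, \qquad
1 - \frac{30\ \text{min}}{360\ \text{min}} \approx 91.7\%, \qquad
1 - \frac{20\ \text{min}}{600\ \text{min}} \approx 96.7\%
```

Even the least favorable pairing of these ranges clears 90%.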

Testim’s authoring

How does Testim do this? First, a QA or business analyst can easily record a flow through the application under test. Testers configure validations (assertions) in the visual editor. Users easily create and share objects and groups across tests. Testim even enables auto-complete while authoring a test. It recognizes step patterns (e.g., a login process) and suggests replacing the steps with a shared group.
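Testim doesn’t describe how its step-pattern suggestions work internally. What follows is only a minimal sketch of the idea, written in TypeScript, a typed superset of the JavaScript that tools like Testim use for customization. It assumes a recorded test is a flat list of action/target pairs, and that a shared group matches only an exact, contiguous run of steps. The names `Step`, `SharedGroup`, and `suggestGroups` are hypothetical.

```typescript
// Hypothetical, simplified model of a recorded step: an action and a readable target label.
interface Step {
  action: string;
  target: string;
}

// A shared group is a named, reusable sequence of steps (e.g. a login flow).
interface SharedGroup {
  name: string;
  steps: Step[];
}

interface Suggestion {
  index: number; // position in the recorded test where the group's steps begin
  group: string; // name of the shared group that could replace them
}

// Two steps are treated as equivalent when both the action and the target match.
function sameStep(a: Step, b: Step): boolean {
  return a.action === b.action && a.target === b.target;
}

// Scan a recorded test for contiguous runs of steps that exactly match a shared group.
function suggestGroups(recorded: Step[], groups: SharedGroup[]): Suggestion[] {
  const suggestions: Suggestion[] = [];
  for (let i = 0; i < recorded.length; i++) {
    for (const group of groups) {
      const n = group.steps.length;
      if (n === 0 || i + n > recorded.length) continue;
      if (group.steps.every((step, k) => sameStep(step, recorded[i + k]))) {
        suggestions.push({ index: i, group: group.name });
      }
    }
  }
  return suggestions;
}

// Usage: a recorded checkout flow that happens to contain the login steps.
const login: SharedGroup = {
  name: "Login",
  steps: [
    { action: "type", target: "username" },
    { action: "type", target: "password" },
    { action: "click", target: "sign in" },
  ],
};
const recorded: Step[] = [
  { action: "navigate", target: "home page" },
  { action: "type", target: "username" },
  { action: "type", target: "password" },
  { action: "click", target: "sign in" },
  { action: "click", target: "add to cart" },
];
console.log(suggestGroups(recorded, [login]));
```

Here the sketch suggests replacing the three steps that start at index 1 with the “Login” group. A real tool would also need fuzzy matching, because recorded targets rarely line up this neatly.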

There’s no need to find and specify selectors to locate visual elements. Testim does it automatically with self-improving AI-powered locators.

If you are concerned about the lack of flexibility with a low-code tool, don’t be. Low code tools aren’t codeless; most offer the ability to customize any test with JavaScript.

Handling the stability challenge

Keeping your tests stable and avoiding false positives is a cascading problem. If you run your tests from your CI pipelines, these failures will break your build and set off fire drills to troubleshoot them; then you’ll need to fix the test case and rerun it. If you’re lucky, you’ll only go through one or two of these cycles.

These false-positive failures often result from slight UI changes that weren’t reflected in the UI tests. And they tend to occur at the worst possible time: while you are evaluating a release candidate.

How do low-code solutions improve stability? Approaches include locating an element by multiple attributes, using computer vision or self-learning locators, and applying techniques that better synchronize your application under test (AUT) with your tests.
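Synchronization problems often come from checking the page before it is ready. One common technique is a conditional wait: keep polling a condition until it holds or a timeout expires, rather than sleeping for a fixed time. Here is a minimal TypeScript sketch of that technique. The `waitUntil` helper and its parameters are hypothetical, not any specific tool’s API, and the condition stands in for a real check such as “the Submit button is enabled.”

```typescript
// Poll `condition` every `intervalMs` until it returns true, or reject after `timeoutMs`.
// The condition is always checked at least once, even with a zero timeout.
async function waitUntil(
  condition: () => boolean | Promise<boolean>,
  timeoutMs = 5000,
  intervalMs = 100,
): Promise<void> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    if (await condition()) return;
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    // Yield to the application for a moment before checking again.
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Usage: a stand-in for "the Submit button is enabled", which becomes true on the third check.
let checks = 0;
const submitEnabled = (): boolean => ++checks >= 3;

waitUntil(submitEnabled, 1000, 10)
  .then(() => console.log(`ready after ${checks} checks`))
  .catch((err: Error) => console.error(err.message));
```

Compared with a fixed sleep, polling returns as soon as the application is ready and fails with a clear message when it never becomes ready.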

Testim’s stability

At Testim, test stability continues to be a top development priority. We start with AI-powered smart locators that are created automatically during the recording process. The algorithm evaluates hundreds of attributes within the HTML DOM, weighs them, and assigns a score to each element. If a few attributes change due to normal development activities, Testim will attempt to find the element using the other attributes. This self-healing process means that when a developer changes the location, color, and text of an “Add to cart” button, Testim can still recognize that the new “Buy” button serves the same purpose and keep the functional test running.
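Testim’s actual attributes, weights, and scoring are not public. This minimal TypeScript sketch shows only the general idea of weighted multi-attribute matching. It assumes each element is reduced to a small map of attribute names to values. The `WEIGHTS` table, the 0.5 threshold, and the `score` and `locate` functions are all hypothetical.

```typescript
// Attribute name → value, as captured at recording time or read from the live page.
type Attributes = { [name: string]: string };

// Hypothetical weights: attributes that rarely change count for more than cosmetic ones.
const WEIGHTS: { [name: string]: number } = {
  id: 5,
  "data-testid": 5,
  role: 2,
  text: 2,
  class: 1,
  color: 0.5,
};

// Weighted fraction of the baseline's attributes that the candidate still matches (0..1).
function score(baseline: Attributes, candidate: Attributes): number {
  let matched = 0;
  let total = 0;
  for (const key of Object.keys(baseline)) {
    const weight = WEIGHTS[key] ?? 1; // unknown attributes get a default weight of 1
    total += weight;
    if (candidate[key] === baseline[key]) matched += weight;
  }
  return total === 0 ? 0 : matched / total;
}

// Return the best-scoring candidate, or null if none reaches the threshold.
function locate(
  baseline: Attributes,
  candidates: Attributes[],
  threshold = 0.5,
): Attributes | null {
  let best: Attributes | null = null;
  let bestScore = -1;
  for (const candidate of candidates) {
    const s = score(baseline, candidate);
    if (s > bestScore) {
      best = candidate;
      bestScore = s;
    }
  }
  return bestScore >= threshold ? best : null;
}

// Usage: the recorded "Add to cart" button, later redesigned as "Buy".
const baseline: Attributes = {
  id: "add-to-cart",
  "data-testid": "cart-button",
  role: "button",
  text: "Add to cart",
  class: "btn-primary",
  color: "blue",
};
const buy: Attributes = { ...baseline, text: "Buy", color: "green" };
const wishlist: Attributes = {
  id: "wishlist",
  role: "button",
  text: "Save for later",
  class: "btn-secondary",
  color: "blue",
};
console.log(locate(baseline, [wishlist, buy]) === buy); // true
```

The redesigned button still matches the heavily weighted `id`, `data-testid`, `role`, and `class` values, so it scores about 0.84. The unrelated button shares only `role` and `color` and scores about 0.16. Weighting is what lets a few cosmetic changes pass without breaking the test.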

In addition, Testim recently released auto-improving locators. When incremental changes to the web application cause the elements to drift from their test baseline, Testim uses machine learning to automatically identify, test, and replace degraded locators with improved locators.
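Testim describes this feature as machine learning. The minimal TypeScript sketch here swaps in a plain threshold heuristic, only to show the shape of the idea: if an element is still found but its stored baseline has drifted, the baseline gets refreshed before it degrades further. The `StoredLocator` type, the `similarity` and `maybeImprove` functions, and both thresholds are hypothetical.

```typescript
type Attributes = { [name: string]: string };

interface StoredLocator {
  name: string;
  baseline: Attributes;
}

// Unweighted fraction of baseline attributes the live element still matches (0..1).
function similarity(baseline: Attributes, live: Attributes): number {
  const keys = Object.keys(baseline);
  if (keys.length === 0) return 0;
  const same = keys.filter((k) => live[k] === baseline[k]).length;
  return same / keys.length;
}

// After a run where the element was found, refresh a locator that has drifted:
// still matchable (>= matchThreshold) but degraded (< healthyThreshold).
// Healthy locators are kept as-is; non-matching ones are left for a human to review.
function maybeImprove(
  locator: StoredLocator,
  live: Attributes,
  matchThreshold = 0.5,
  healthyThreshold = 0.8,
): StoredLocator {
  const s = similarity(locator.baseline, live);
  if (s >= matchThreshold && s < healthyThreshold) {
    return { ...locator, baseline: { ...live } };
  }
  return locator;
}

// Usage: two of five attributes drifted (similarity 0.6), so the baseline is refreshed.
const checkout: StoredLocator = {
  name: "checkout button",
  baseline: { id: "checkout", role: "button", text: "Checkout", class: "btn", color: "blue" },
};
const drifted = { id: "checkout", role: "button", text: "Check out", class: "btn", color: "green" };
console.log(maybeImprove(checkout, drifted).baseline.text); // logs "Check out"
```

Refreshing while the locator still matches comfortably means the next small change is measured against a recent baseline rather than an increasingly stale one.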

Testim includes many more features that improve stability and reduce maintenance, including filtering and mapping of dynamic CSS, auto-grouping of reusable components, deduplication and refactoring of repeated steps, conditional waits, and more. And if you want to tinker with the locators, we trust you to know your application, so Testim lets you make manual adjustments.
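Dynamic CSS is a common source of brittle locators. Some build tools and CSS-in-JS libraries generate hashed class names that change between builds, so a selector that depends on them breaks. This minimal TypeScript sketch covers only the filtering half of the idea: it drops class names that look generated before building a selector. The regular expressions are illustrative assumptions, not Testim’s rules, and a real tool would tune them per application.

```typescript
// Hypothetical patterns for generated class names; a real tool would tune these per application.
const DYNAMIC_CLASS_PATTERNS: RegExp[] = [
  /^css-[a-z0-9]+$/i, // hashed names such as "css-1q2w3e"
  /^sc-[a-z0-9]+$/i, // prefixed generated names such as "sc-bdVaJa"
  /\d{4,}/, // long digit runs, often build or session ids
];

function isDynamicClass(className: string): boolean {
  return DYNAMIC_CLASS_PATTERNS.some((pattern) => pattern.test(className));
}

// Keep only the class names that look stable enough to use in a locator.
function stableClasses(classAttr: string): string[] {
  return classAttr.split(/\s+/).filter((c) => c.length > 0 && !isDynamicClass(c));
}

// Build a CSS selector from the tag name and its stable classes only.
function toSelector(tag: string, classAttr: string): string {
  return tag + stableClasses(classAttr).map((c) => `.${c}`).join("");
}

console.log(toSelector("button", "btn btn-primary css-1q2w3e sc-bdVaJa item-123456"));
// button.btn.btn-primary
```

Pattern-based filtering is simple and predictable, but it can misclassify legitimate names. That is one reason the manual adjustments mentioned above still matter.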

What about the value? When I talk to customers about test maintenance, they often say that keeping tests up to date took between 30% and 40% of their QA team’s time when they used one of these coded frameworks. After shifting to Testim, they dropped that to 5%. In a 40-hour week, that’s a savings of roughly 10 to 14 hours per person!

Hopefully, by now you can see the significant gains to be had by shifting to an AI-powered low-code test automation platform. It’s at least worth a closer look.

Before I close this post, let’s talk about how visual testing is critical to assessing your application’s release readiness.

Functional and visual testing

Functional and end-to-end testing ensures that the application performs its designed functions accurately. Automated functional and end-to-end testing attempts to mimic how a person would interact with a web application.

Visual validation testing is more concerned with the appearance of the application. Does it include the correct branding elements, and does it represent the proper layout and appearance? Are there elements that interfere with the application’s functionality (e.g., a popup that hides a button)?
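One question from the paragraph above has a simple geometric core: how much of a button is hidden by a popup? This minimal TypeScript sketch answers it. It assumes you already have each element’s bounding box, for example from the browser’s `getBoundingClientRect()`. Visual testing tools such as Applitools compare rendered screenshots instead, so treat this as an illustration of one check, not of how those tools work. The `Box` type and both functions are hypothetical.

```typescript
// Axis-aligned bounding box in page pixels, e.g. as reported by getBoundingClientRect().
interface Box {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Area where two boxes overlap (0 if they don't).
function intersectionArea(a: Box, b: Box): number {
  const w = Math.min(a.x + a.width, b.x + b.width) - Math.max(a.x, b.x);
  const h = Math.min(a.y + a.height, b.y + b.height) - Math.max(a.y, b.y);
  return w > 0 && h > 0 ? w * h : 0;
}

// Fraction of `target` hidden by `overlay` (0..1); a check might fail above some threshold.
function coveredFraction(target: Box, overlay: Box): number {
  const area = target.width * target.height;
  return area === 0 ? 0 : intersectionArea(target, overlay) / area;
}

const buyButton: Box = { x: 100, y: 400, width: 120, height: 40 };
const popup: Box = { x: 0, y: 300, width: 400, height: 300 };
const banner: Box = { x: 0, y: 0, width: 400, height: 80 };
console.log(coveredFraction(buyButton, popup)); // 1 (fully hidden)
console.log(coveredFraction(buyButton, banner)); // 0 (no overlap)
```

Overlap alone says nothing about stacking order, so a real check would also need to confirm which element is rendered on top.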

Both functional and visual testing are necessary to fully test and validate your application’s usefulness. If elements of the application don’t render well, they can prevent users from performing their required tasks. Misaligned visuals can also reflect poorly on your brand. Think of a website where overlapping text made the page difficult to read. Even if you were able to complete your task, you likely lost confidence in the application. You don’t want your users questioning the quality of your application because it presents poorly.

Customers use Testim and Applitools together for a more holistic approach to testing their web applications, both functionally and visually. Both tools mimic how real users interact with your application as they perform tasks. A user’s total experience goes beyond whether they can use the functionality to finish a task; it also includes ease of use and the sense of quality shaped by your application’s design and visual polish.

Testim enhanced its integration with Applitools

Testim’s improved integration with Applitools now supports the following:

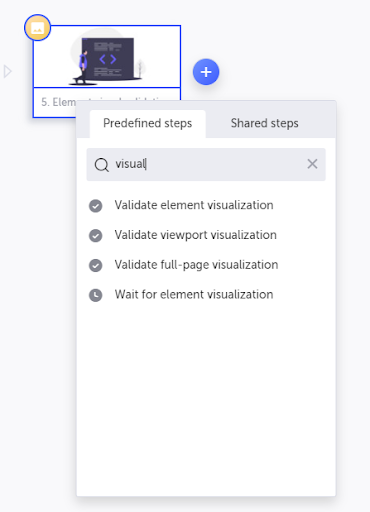

- Easily add Applitools visual validations to a Testim test step.

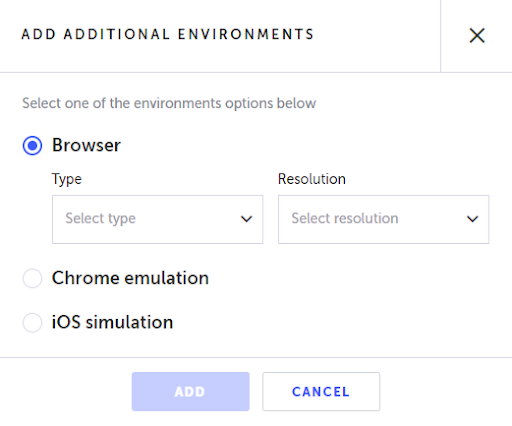

- Teams can send screenshots and the DOM to Applitools’ Ultrafast Test Cloud, enabling cross-browser visual comparisons.

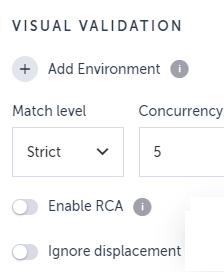

- Users can now choose to ignore differences caused by element displacements.

- Root cause analysis (RCA) provides insights into the causes of visual mismatches reported by Eyes.

- Ease-of-use improvements include choosing configuration options, such as the match level and ignoring displacements, directly from Testim’s UI, either locally in the validation step or globally across multiple tests.

- Transfer branch names and labels from Testim to the relevant properties in Applitools.

- New support for multi-step tests, making grouping easier within Applitools.

- New support for cross-origin iFrames.
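To make the “ignore displacements” option concrete, here is a deliberately simplified TypeScript model. It is not how Applitools Eyes works, since Eyes compares screenshots with its own visual AI. The sketch assumes each rendered element has a stable id, its visible content, and a position. With displacements ignored, an element whose content is unchanged but which merely moved is no longer reported. The `RenderedElement` and `Difference` types and the `compareLayouts` function are hypothetical.

```typescript
// Hypothetical, simplified model of a rendered element: a stable id, its content, and position.
interface RenderedElement {
  id: string;
  content: string;
  x: number;
  y: number;
}

interface Difference {
  id: string;
  kind: "missing" | "added" | "changed" | "moved";
}

// Compare a baseline rendering with a new checkpoint. When ignoreDisplacements is true,
// an element whose content is unchanged but whose position moved is not reported.
function compareLayouts(
  baseline: RenderedElement[],
  checkpoint: RenderedElement[],
  ignoreDisplacements: boolean,
): Difference[] {
  const differences: Difference[] = [];
  const remaining: { [id: string]: RenderedElement } = {};
  for (const el of checkpoint) remaining[el.id] = el;

  for (const before of baseline) {
    const after = remaining[before.id];
    if (after === undefined) {
      differences.push({ id: before.id, kind: "missing" });
      continue;
    }
    if (after.content !== before.content) {
      differences.push({ id: before.id, kind: "changed" });
    } else if (!ignoreDisplacements && (after.x !== before.x || after.y !== before.y)) {
      differences.push({ id: before.id, kind: "moved" });
    }
    delete remaining[before.id];
  }
  // Anything left in the checkpoint was not in the baseline.
  for (const id of Object.keys(remaining)) differences.push({ id, kind: "added" });
  return differences;
}

// Usage: the Buy button is pushed down 50px; the footer text actually changed.
const baselineShot: RenderedElement[] = [
  { id: "logo", content: "Acme", x: 0, y: 0 },
  { id: "buy", content: "Buy", x: 100, y: 400 },
  { id: "footer", content: "Copyright 2021", x: 0, y: 900 },
];
const checkpointShot: RenderedElement[] = [
  { id: "logo", content: "Acme", x: 0, y: 0 },
  { id: "buy", content: "Buy", x: 100, y: 450 },
  { id: "footer", content: "Copyright 2022", x: 0, y: 950 },
];
console.log(compareLayouts(baselineShot, checkpointShot, false).map((d) => `${d.id}:${d.kind}`));
console.log(compareLayouts(baselineShot, checkpointShot, true).map((d) => `${d.id}:${d.kind}`));
```

With displacements reported, both the moved button and the changed footer show up. With them ignored, only the footer’s real content change remains, which is the kind of noise reduction that option is meant to provide.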

To try out this seamless integration between Testim and Applitools, you’ll need licenses for both Testim and Applitools. You can learn more about Testim and get a free account.

[1] Gartner, Market Guide for AI-Augmented Software Testing Tools, 17 December 2021, ID G00744939, Joachim Herschmann, Thomas Murphy, Jim Scheibmeir